Recent Content:

Exploiting Password Weaknesses in Physical Security

In which I channel the spirit of my eighth academic cousin thrice removed, Richard Feynman.

Digital spin locks like the Kaba Mas X-09 and X-10 are very common for high security applications like vault doors. US General Services Administration approval means that they are nearly ubiquitous in securing government filing cabinets that contain documents that are classified secret, as well as securing the doors to laboratories in which secret code is written and stored. Strolling through the hallways of any Department of Defense facility or government contractor’s office, one is likely to see many of these on the doors:

[Image: Kaba Mas X-09]

These types of locks are very sophisticated: they are self-powered by rotating the dial and they have a digital printout on the top of the code as it is being entered. Security features to thwart automated brute force attack include random jumps in the numbers as the dial is being turned, algorithms for detecting “non-human” rotations of the dial, and long timeouts for successive failed attempts. These types of locks are usually configured to accept a six-digit code that is input as a sequence of three numbers from 0 to 99. That means there are $math$10^6 = 100^3 = 1,000,000$math$ possible combinations. With a four minute timeout, brute forcing all of the combinations would take almost eight years!
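The arithmetic behind that estimate can be checked with a few lines of Python. This is only a sketch: it assumes every failed attempt costs the full four-minute timeout and ignores the time spent actually dialing, and the function name is mine.

```python
# Back-of-the-envelope brute-force time, assuming one attempt per timeout.
TIMEOUT_MINUTES = 4                  # lockout after a failed attempt (from the text)
MINUTES_PER_YEAR = 60 * 24 * 365

def exhaustive_search_years(num_codes, timeout_minutes=TIMEOUT_MINUTES):
    """Years needed to try every code once, one attempt per timeout."""
    return num_codes * timeout_minutes / MINUTES_PER_YEAR

total_codes = 100 ** 3               # three numbers, each from 0 to 99
print(total_codes)                                      # 1000000
print(round(exhaustive_search_years(total_codes), 1))   # 7.6
```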

Humans are generally bad at remembering long sequences of numbers, and in most facilities the combinations to these locks are changed frequently. A single employee might have to memorize dozens of different combinations (one for each safe, door, etc.). Therefore, as a mnemonic, the security officers who set the codes often use six-letter words which are translated into codes using the mapping from a phone keypad. In fact, one will almost always see a magnet or sticker like this right next to the lock:

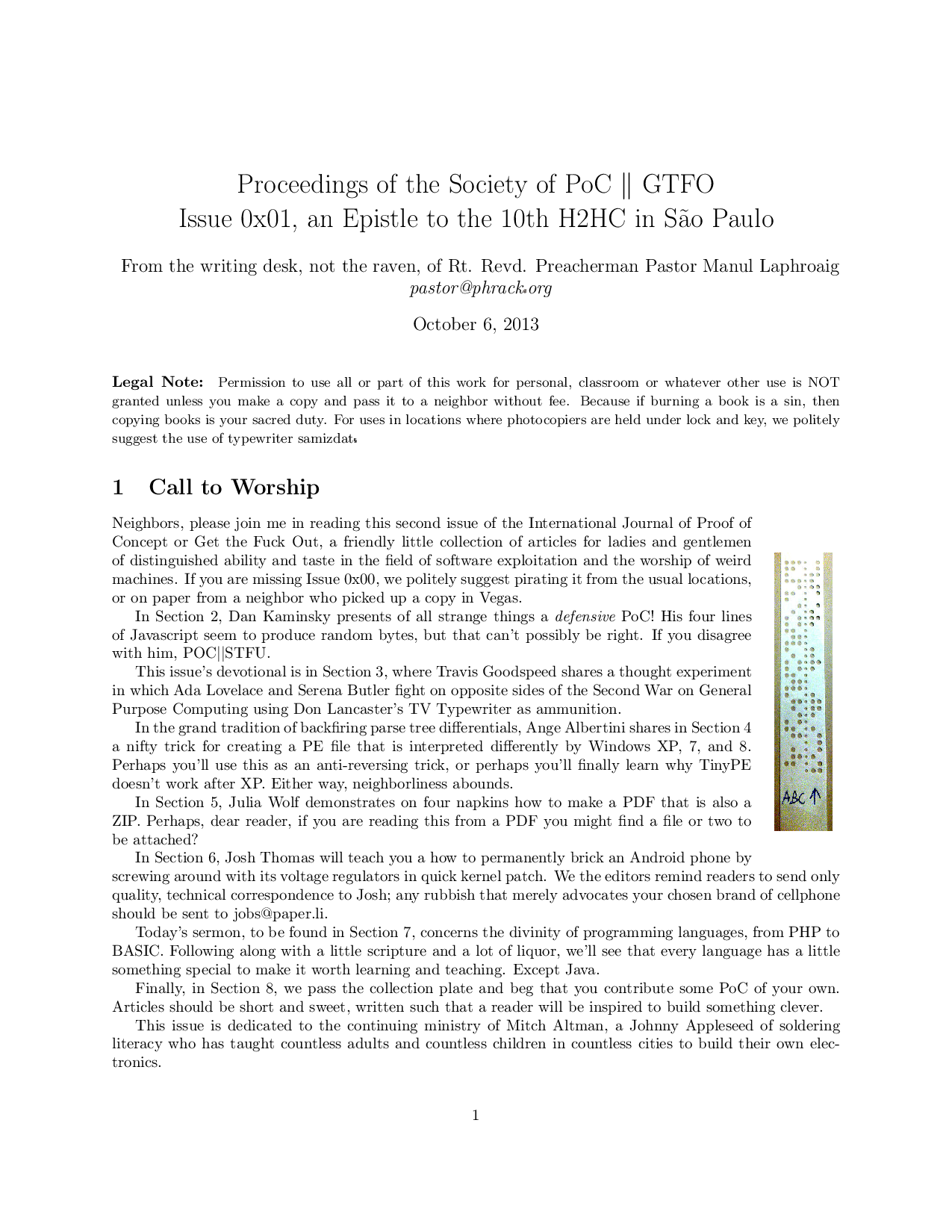

For example, the word “HACKER” would equate to the code 42-25-37.

| | | |
|---|---|---|
| 1 | 2 abc | 3 def |
| 4 ghi | 5 jkl | 6 mno |
| 7 pqrs | 8 tuv | 9 wxyz |
| | 0 | |
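The keypad translation is simple enough to write down directly. Here is a minimal sketch in Python; the names `KEYPAD` and `word_to_code` are mine and purely illustrative.

```python
# Standard phone keypad: digits 0 and 1 carry no letters.
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {letter: digit
                   for digit, letters in KEYPAD.items()
                   for letter in letters}

def word_to_code(word):
    """Translate a word into a dash-separated combination, two digits per number."""
    digits = "".join(LETTER_TO_DIGIT[ch] for ch in word.lower())
    return "-".join(digits[i:i + 2] for i in range(0, len(digits), 2))

print(word_to_code("HACKER"))  # 42-25-37
```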

And therein lies a vulnerability. First of all, using a phone dial pad for the mapping from letters to numbers means that the numbers zero and one will never be used! This means the number of potential codes to test has been decreased from one million to $math$8^6 = 262,144$math$. That’s a decrease of almost 75%! But we can do even better.

Next, let’s assume that the code word is a six-letter English word that occurs in the dictionary. Looking at /usr/share/dict/words, there are only 17,706 six-letter English words that have direct mappings using a phone keypad. That’s much lower than the 262,144 possible codes without zero and one, which means that we have reduced the number of possible codes to brute force by over 98% from the original! For example, the code 55-57-59 doesn’t appear to correspond to any words in the dictionary. That’s likely because almost all words need at least one vowel, and the phone keys 5, 7, and 9 don’t provide any vowels. Furthermore, many of those 17,706 words actually map to the same code. For example, the words “DOSAGE”, “ENRAGE”, “FORAGE”, and “FORBID” all map to the code 36-72-43. If we just count the number of distinct codes generated by those six-letter English words, we get only 14,684, a reduction of almost 99%! With a four minute failure timeout, brute forcing those 14,684 codes would only take about forty days.
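A rough sketch of how such counts can be gathered is below. The filtering rules (lowercasing, ASCII letters only, exactly six characters) are my assumptions, so running it against a particular copy of /usr/share/dict/words will not necessarily reproduce the exact figures above. The demo uses a tiny hand-picked list instead of the real dictionary.

```python
from collections import defaultdict

KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {l: d for d, letters in KEYPAD.items() for l in letters}

def word_to_digits(word):
    """Six-letter word -> six-digit string."""
    return "".join(LETTER_TO_DIGIT[ch] for ch in word)

def group_by_code(words):
    """Map each six-digit code to the set of six-letter words that produce it."""
    by_code = defaultdict(set)
    for raw in words:
        word = raw.strip().lower()
        # Assumed filter: plain ASCII letters, exactly six of them.
        if len(word) == 6 and word.isascii() and word.isalpha():
            by_code[word_to_digits(word)].add(word)
    return by_code

sample = ["dosage", "enrage", "forage", "forbid", "hacker"]
by_code = group_by_code(sample)
print(len(by_code))               # 2 distinct codes for 5 words
print(sorted(by_code["367243"]))  # ['dosage', 'enrage', 'forage', 'forbid']
```

To try it on a real word list, pass an open file object (for example `open("/usr/share/dict/words")`) as `words`.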

But wait, there’s more!

Not all letters occur in English words with the same frequency; you’re much more likely to find the letter ‘e’ in a randomly chosen word than the letter ‘x’. For example, almost 10% of the six-letter English words in the dictionary end in the code “37”, just like in our example word “HACKER”. Therefore, if we cleverly order our brute force approach to try the codes that occur most frequently in English words first, then we can increase our chances of cracking the code more quickly.

I sorted all of the 14,684 distinct codes in decreasing order according to the number of words that map to that code. If a code word is chosen uniformly from the set of all six-letter English words, then this ordering will maximize our chances of cracking the code quickest. In fact, after fewer than 250 attempts, we will have cracked the code with about 5% probability. In other words, with only about half a day of access to the lock, a brute force attempt using this ordering will have a 5% chance of success. In contrast, if the lock’s code were instead set using a randomly generated six-digit number, it would require on average 50,000 attempts (~5 months) to crack it with 5% probability. On the other hand, if the code was set from a six-letter English word using a phone keypad mapping, the lock can be cracked with 50% probability after about 16 days.
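The ordering itself is just a frequency sort. The following sketch shows the idea on a toy word list; it assumes, as above, that the code word is drawn uniformly from the word list, and the helper names are illustrative only.

```python
from collections import Counter

KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {l: d for d, letters in KEYPAD.items() for l in letters}

def word_to_digits(word):
    return "".join(LETTER_TO_DIGIT[ch] for ch in word.lower())

def attack_order(words):
    """Return (code, word_count) pairs, most popular code first."""
    return Counter(word_to_digits(w) for w in words).most_common()

def attempts_needed(ordered, target_probability):
    """Attempts required before the cumulative success chance reaches the target."""
    total_words = sum(count for _, count in ordered)
    covered = 0
    for attempts, (_, count) in enumerate(ordered, start=1):
        covered += count
        if covered / total_words >= target_probability:
            return attempts
    return len(ordered)

sample_words = ["dosage", "enrage", "forage", "forbid", "hacker", "jacket"]
ordered = attack_order(sample_words)
print(ordered[0])                     # ('367243', 4)
print(attempts_needed(ordered, 0.5))  # 1
```

On the toy list a single guess already covers four of the six words; on a full dictionary the curve is far flatter, which is where the figures quoted above come from.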

Codes are not usually changed on a month-to-month frequency, so an adversary with intermittent access to the lock (e.g., after hours) could quite conceivably crack it before the code was changed.

If there exists an additional vulnerability that allows the attacker to detect whether each successive two-digit subcode is correct before entering the next two-digit subcode, then the expected value for the length of time required to crack the code is on the order of minutes.

So what can we do about it? The most obvious solution is to simply choose codes randomly from the set of all possible one million 6-digit numbers. If we really do want to have a mnemonic, though, we could do a lot better by using a letter-to-number mapping that makes use of zeros and ones, and also produces a relatively even distribution of codes from English words. Here is one such example of a mapping:

| | | |
|---|---|---|
| 1 a | 2 e | 3 br |
| 4 co | 5 dnq | 6 ip |
| 7 lmjz | 8 hkt | 9 su |
| | 0 fgvwxy | |

It’s not necessarily the best one, though. Finding the optimal mapping (the one that ensures a uniform distribution across all six-digit codes for six-letter English dictionary words) is actually an instance of the multiple knapsack problem (a variant known as the multiple subset sum problem) and is therefore NP-Complete, and likely intractable for problems even as “small” as this one. Let $math$L = \{a, b, \ldots, z\}$math$ be the set of letters in the alphabet and let $math$f : L \rightarrow \mathbb{N}$math$ be a function mapping letters to their frequency among six-letter words in the dictionary. Then we can relax the problem to the minimization of the mean squared error over the summed frequencies of the letters mapped to each number: Find a function $math$t : L \rightarrow \{0, \ldots, 9\}$math$ that maps letters to numbers such that $equation$\frac{1}{10}\sum_{i=0}^9 \left(\sum_{\ell \in t^{-1}(i)} f(\ell) - \frac{1}{10}\sum_{\ell' \in L} f(\ell')\right)^2$equation$ is minimized. The total number of legal mappings, $math$t$math$, is $math$10^{26} = 100$math$ million billion billion. With that said, the example mapping above will be at least a million billion billion times more secure than using a phone keypad mapping!
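The relaxed objective is easy to evaluate for any candidate mapping. The sketch below scores a mapping by the mean squared deviation of each digit's summed letter frequency from the ideal of one tenth of the total. The function names are mine, and the tiny word list only demonstrates the mechanics; a meaningful comparison needs the letter frequencies of the full six-letter word list.

```python
from collections import Counter
import string

PHONE_KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
EXAMPLE_MAPPING = {
    "1": "a", "2": "e", "3": "br", "4": "co", "5": "dnq",
    "6": "ip", "7": "lmjz", "8": "hkt", "9": "su", "0": "fgvwxy",
}

def letter_frequencies(words):
    """f: letter -> number of occurrences across the given words."""
    return Counter(ch for w in words for ch in w.lower()
                   if ch in string.ascii_lowercase)

def load_mse(mapping, freq):
    """Mean squared deviation of each digit's summed frequency from total/10."""
    mean_load = sum(freq.values()) / 10
    loads = [sum(freq.get(letter, 0) for letter in mapping.get(str(digit), ""))
             for digit in range(10)]
    return sum((load - mean_load) ** 2 for load in loads) / 10

# Toy input only; substitute the real six-letter word list here.
freq = letter_frequencies(["dosage", "enrage", "forage", "forbid", "hacker"])
print(load_mse(PHONE_KEYPAD, freq))
print(load_mse(EXAMPLE_MAPPING, freq))
```

A lower score means the digits are used more evenly. Searching over all mappings for the minimum is the hard part; this function only evaluates one candidate.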

The moral of the story here isn’t that these locks are inherently vulnerable; if used properly, they are in fact incredibly secure. The takeaway is that these locks are only as secure as the codes humans choose to assign to them. Using a phone keypad mapping on six-letter English dictionary words is the physical security equivalent of a website arbitrarily limiting passwords to eight characters.

A couple days ago, Dominic Spill and Michael Ossman presented an interesting talk at Shmoocon on using specially crafted error correcting codes to have unambiguous encapsulation, preventing attacks like “Packet in Packet.” This appears to be the culmination of some work that was first proposed to the LANGSEC mailing list back in March. The underlying problem that they are trying to address is that the physical layer is almost always error-prone: bits often get flipped. The data link layer addresses this by using error-correcting codes: methods of encoding the bits to be transmitted such that certain types of errors can be caught and corrected, at the expense of some additional bandwidth overhead.

Packet in Packet Attacks

If a sufficient number of bit-errors occur, however, error correcting codes can still fail to correct or even detect an error. Protocols usually address this by adding a checksum in the packet header/preamble. To illustrate this, let’s consider a made-up link layer protocol in which there is a header that starts with the magic number 0x1337 followed by a checksum and the message body. If a detectable but uncorrectable error occurs, then the packet can be dropped. If an undetected bit-error occurs in the body, then the checksum will fail and the packet will be dropped. If an undetected error occurs in the header, then either the magic will be broken and it will fail to be detected as a frame or the checksum will fail, in both cases causing the packet to be dropped. The one exception to this latter case is the basis for Packet in Packet attacks: If we were to start the message body with 0x1337 followed by a checksum and our desired body, then the link layer protocol will skip over the corrupted header and think that the actual frame starts at the beginning of our hand-crafted body! In effect, we have embedded a complete packet inside the body of another. It turns out that if we transmit this carefully crafted packet-in-a-packet a bunch of times, then eventually (in what turns out to be a reasonable amount of time) a bit-error will occur causing our payload to be interpreted as the real link layer frame. Attacks like this have been demonstrated on real data link layer protocols like IEEE 802.15.4.
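To make the failure mode concrete, here is a toy receiver for the made-up protocol above. Everything beyond the 0x1337 magic is an assumption for illustration: I added a one-byte length field so the receiver knows where the body ends, and the checksum is simply the byte sum modulo 256. Real link layers differ in all of these details.

```python
from typing import List

MAGIC = b"\x13\x37"

def checksum(body: bytes) -> int:
    """Toy checksum (assumed): byte sum modulo 256."""
    return sum(body) % 256

def make_frame(body: bytes) -> bytes:
    """Frame layout (assumed): magic, length, checksum, body."""
    if len(body) > 255:
        raise ValueError("body too long for one-byte length field")
    return MAGIC + bytes([len(body), checksum(body)]) + body

def receive(stream: bytes) -> List[bytes]:
    """Scan a byte stream for valid frames, resynchronizing on failures."""
    frames = []
    i = 0
    while i + 4 <= len(stream):
        if stream[i:i + 2] != MAGIC:
            i += 1                      # not a frame start; keep scanning
            continue
        length, check = stream[i + 2], stream[i + 3]
        body = stream[i + 4:i + 4 + length]
        if len(body) == length and checksum(body) == check:
            frames.append(body)
            i += 4 + length
        else:
            i += 1                      # bad frame: drop it and resync
    return frames

inner = make_frame(b"attacker-chosen payload")
outer = make_frame(inner)               # the body starts with a complete frame

# Intact transmission: the receiver sees one frame whose body is opaque data.
print(receive(outer) == [inner])        # True

# One flipped bit in the outer magic: the receiver resyncs on the inner frame.
corrupted = bytes([outer[0] ^ 0x01]) + outer[1:]
print(receive(corrupted))               # [b'attacker-chosen payload']
```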

Isolated Complementary Binary Linear Block Codes

Spill and Ossman propose using a special error correcting code that has a property they call “isolation:” the code can be split into multiple subcodes that have additional error-correcting properties between them. One of the subcodes could be used strictly for bits of the header/preamble, while another subcode could be used strictly for the body. This provides additional error correcting capability specifically for cases like that which the attack above exploits, without increasing the bandwidth overhead of the code. They call this family of codes “Isolated Complementary Binary Linear Block Codes” (ICBLBC).

As an example, consider the following eight code words:

00000 | 00011 | 00101 | 01001 |

01110 | 10110 | 11010 | 11100 |

Note that each code word differs from the others by at least two bits. This means that we can detect single-bit errors. For example, if 01110 is corrupted in the physical layer to 01010, then we can immediately know that an error occurred because that code does not exist in our set of eight. If two or more bit-errors occur, then an error may go undetected, which is the case that Packet in Packet attacks exploit. This is exactly like the types of error correcting codes used in most data link layer protocols. The difference, however, is that this particular set of eight code words has an additional property: it is an isolated complementary binary linear block code. Let’s call the first row of codes “Subcode 1” and the second row of codes “Subcode 2.” Now, note that every code in Subcode 1 differs from every code in Subcode 2 by at least three bits. That means that, after a single-bit error, we can not only detect the error but also correctly determine which subcode the original code word belonged to, giving the two subcodes properties of a Hamming Code with respect to each other. If we encode the header/preamble of the packet using one subcode and the body using the other, then we can circumvent Packet in Packet attacks with little to no extra bandwidth overhead!
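Both distance claims can be verified mechanically for the eight code words above. The sketch below computes the minimum Hamming distance over all pairs and between the two subcodes. The helper `nearest_subcode` is a hypothetical illustration of how a receiver could attribute a corrupted word to a subcode; it is not taken from Spill and Ossman's code.

```python
from itertools import combinations, product

SUBCODE_1 = [0b00000, 0b00011, 0b00101, 0b01001]
SUBCODE_2 = [0b01110, 0b10110, 0b11010, 0b11100]

def hamming(a, b):
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")

def min_distance_within(codes):
    return min(hamming(a, b) for a, b in combinations(codes, 2))

def min_distance_between(codes_a, codes_b):
    return min(hamming(a, b) for a, b in product(codes_a, codes_b))

def nearest_subcode(word):
    """Return 1 or 2, whichever subcode contains the closest code word."""
    d1 = min(hamming(word, c) for c in SUBCODE_1)
    d2 = min(hamming(word, c) for c in SUBCODE_2)
    return 1 if d1 < d2 else 2

print(min_distance_within(SUBCODE_1 + SUBCODE_2))   # 2
print(min_distance_between(SUBCODE_1, SUBCODE_2))   # 3
print(nearest_subcode(0b01010))                     # 2 (corrupted 01110)
```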

Generating the Codes

Spill and Ossman have provided some code that can enumerate these codes. Currently, they have reported discovery of 6-bit ICBLBCs with up to twenty-two codes in subcode 1 and two codes in subcode 2, or up to ten codes in each if they are divided evenly. While their implementation does work, it is limited by the fact that it essentially performs a brute-force search to discover them, causing them to investigate a hardware-based approach using FPGAs.

Out of curiosity, I wondered if this problem could be solved more efficiently using constraint reasoning. Therefore, we implemented a generator that encodes the ICBLBC constraints as a Satisfiability Modulo Theories problem and used DOCO (Digital Operatives’ proprietary constraint optimizer) to enumerate all feasible solution codes. Sure enough, our solver was able to almost instantaneously reproduce Spill and Ossman’s results, as well as enumerate codes of larger bit length that would likely be intractable to discover using brute force search. For example, in less than a second it was able to discover this 7-bit ICBLBC consisting of fifty codes in subcode 1 and two codes in subcode 2:

| Subcode 1 | Subcode 2 |
|---|---|
| 37, 118, 44, 21, 35, 28, 107, 61, 41, 25, 94, 13, 56, 84, 124, 31, 110, 117, 50, 76, 62, 49, 47, 81, 7, 19, 109, 87, 103, 22, 93, 82, 70, 26, 127, 115, 55, 52, 59, 73, 11, 91, 88, 67, 69, 42, 121, 14, 38, 122 | 0, 96 |

as well as this one with nineteen codes in each subcode:

| Subcode 1 | Subcode 2 |
|---|---|
| 23, 89, 17, 27, 9, 65, 51, 5, 83, 85, 0, 18, 80, 54, 36, 33, 113, 3, 116 | 76, 122, 61, 127, 103, 14, 107, 104, 79, 56, 109, 98, 28, 70, 94, 47, 110, 74, 42 |

This 8-bit ICBLBC was discovered in a matter of seconds and consists of eighty codes in subcode 1 and two codes in subcode 2:

| Subcode 1 | Subcode 2 |
|---|---|
| 1, 4, 7, 8, 13, 16, 19, 25, 30, 32, 35, 37, 41, 42, 44, 47, 49, 54, 56, 59, 61, 69, 76, 81, 84, 87, 88, 97, 100, 104, 109, 110, 112, 121, 122, 124, 127, 128, 131, 133, 137, 142, 145, 146, 148, 152, 155, 161, 162, 164, 168, 171, 173, 176, 179, 181, 185, 190, 193, 196, 199, 200, 205, 208, 211, 217, 218, 220, 223, 224, 227, 229, 233, 234, 236, 239, 241, 246, 248, 253 | 66, 75 |

I have reimplemented this approach using Microsoft’s “free” (as in beer) Z3 constraint solver and pushed it to a fork of Spill and Ossman’s project, available here. As always, feedback on this new approach is greatly appreciated.

With the recent and ongoing disclosures of what appear to be widespread Internet surveillance programs, the public is becoming increasingly aware of the privacy risks in sending plaintext E-mail. Even connecting to one’s E-mail service provider using a cryptographically secure protocol like HTTPS provides a false sense of security, because one cannot ensure the trust or privacy of any intermediary servers/connections used to route the message to its recipient. As such, there are many excellent tutorials—and even entire web campaigns—that empower average users to protect their online communications via free tools like OpenPGP.

I have been personally encrypting my E-mail for well over a decade, and, since day one, Digital Operatives has employed strong cryptography to protect all of its internal E-mail communications. This works extremely well, and, for all intents and purposes, is currently very secure. There are some downsides, however. The number one complaint about using public key cryptography to secure all E-mail communications is that there really isn’t a good way to search through the bodies of the E-mails in your inbox (since the message bodies are encrypted, a simple search for a term like “cat” or “meeting” won’t match any of the E-mails it otherwise should have). In fact, the second bug ever reported for the popular EnigMail GPG plugin for the Thunderbird mail client was a feature request asking for the ability to search through encrypted E-mail bodies. That bug was opened in 2003 … and it is still open today.

The difficulty is that decryption is too computationally expensive to run on every message body on the fly during a search. The alternative would be to temporarily decrypt the message bodies of new E-mails as they arrive and add them to a search index, but this invites a security vulnerability, since sensitive message data would then be included in the search index.

Given that over 90% of the E-mail in my inbox is encrypted, I decided to scratch my own itch and develop a solution to this problem. I took the second approach mentioned above: I incrementally build a search index to search across the encrypted message bodies. To mitigate the aforementioned security risk with this approach, I encrypt the entire search index to the same key pair used for one’s E-mails, so that it can only be read with the private key that already decrypts them. Therefore, the only risk would be if an adversary got access to one’s private key, but that of course would have even worse security implications since he or she could then read all of the original E-mails anyway.

My proof-of-concept solution is a tool called Magiic. Magiic Allows for GPG Indexing of IMAP on the Command-line. It is a Python script that uses GnuPG for encryption/decryption and Whoosh for full-text indexing. It acts as a standalone mail application, connecting directly to an IMAP server and creating a local index off of the contents. It has a simple ncurses interface so all interaction can take place on the command line. Digital Operatives is releasing the code using a version of the Creative Commons BY-NC-SA 3.0 license that has been modified slightly to be more applicable for software licensing. It is free for non-commercial use. The code is available here.

A couple years ago I wrote about some thought experiments related to copyright law. Let's say you have a huge number, $n$, two documents, $D_1$ & $D_2$, and a cipher, $f$, that, given the number and the first document, yields the second document: $f(n, D_1) = D_2$. Now let's say $D_1$ is some book in the public domain, whereas $D_2$ is a copyrighted book. In my previous article I wondered,

What is the copyright status of $n$? Can a number be copyrighted?

The difficulty arises from the fact that we have reconstructed a copyrighted artifact solely using artifacts in the public domain. I subsequently posed this scenario to some lawyers, and their opinion was that either the cipher $f$ would be in violation of $D_2$'s copyright, or the copyright status would depend on the intent of the cipher’s creator.

But what if, for the same number $n$, there is another pair of documents, $D_3$ & $D_4$, for which $f(n, D_3) = D_4$? What if $D_3$ and $D_4$ are both in the public domain? Is such an occurrence even possible? It turns out that it is, and it's probably more probable than you might think. Read on to find out.
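For concreteness, here is one simple cipher with the property $f(n, D_1) = D_2$: bytewise XOR, with $n$ viewed as a byte string. This is purely my assumption for illustration and not necessarily the cipher the full article has in mind. The document strings are placeholders.

```python
def xor_cipher(n: bytes, doc: bytes) -> bytes:
    """f(n, D): XOR the key n with the document, byte by byte."""
    return bytes(a ^ b for a, b in zip(n, doc))

d1 = b"public domain text A"    # stands in for D_1
d2 = b"copyrighted text B!!"    # stands in for D_2 (same length as d1)

# With XOR, the "huge number" is just D_1 XOR D_2.
n = xor_cipher(d1, d2)
print(xor_cipher(n, d1) == d2)  # True

# The same n maps any other D_3 to some D_4 ...
d3 = b"another public text."
d4 = xor_cipher(n, d3)
print(xor_cipher(n, d4) == d3)  # True: the mapping is its own inverse
```

For an arbitrary `d3` the resulting `d4` will generally be gibberish; the interesting question raised above is how likely it is that both members of a second pair are meaningful documents.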

I will be presenting a talk introducing distributed combinatorial optimization at the Streams Data Processing Workshop tomorrow. Here are the slides:

Handouts for the talk are also available, here.

There are a number of myths and misconceptions surrounding both the creation and consumption of sushi that I think are important enough to devote an entire blog entry toward their clarification.

Proper sushi has a small amount of wasabi applied to the underside of the fish. Some people claim that "it is to prevent parasites" (via the natural antiseptic properties of the wasabi), but I find this explanation a bit dubious (I would think that the wasabi would have to be uniformly applied to the entire fish to have any measurable effect). The wasabi is really there to add flavor. In really high-end sushi restaurants in Japan, for example, it is relatively uncommon for the guest to be served a mound of grated wasabi; that's the sushi equivalent to serving an un-tossed caesar salad with the dressing on the side. Instead, the chef applies the perfect amount of wasabi to each piece of sushi. Some chefs will not even serve soy sauce, instead individually brushing the sauce onto each piece. If wasabi is served separately from the fish, it is generally also considered bad form to mix it with the soy sauce (as is commonly done in the US).

So, why might you have never seen this before at your local sushi joint?

- It takes more time/skill/effort to do it properly.

- Many sushi restaurants in the US do not have properly trained sushi chefs. In fact, in most areas of the country with little or no Japanese population, don't be surprised if your sushi chef is a Salvadorian who learned from the Oaxacan who learned from the Korean owner of the restaurant. Not that there's anything wrong with that; one of my favorite sushi places is run by an Indonesian. Just keep in mind that the people making you your sushi may have never experienced the "real thing" themselves.

- "Real," fresh wasabi is very rare and expensive. Most of the stuff that is used in the US is basically horseradish paste plus food coloring. Restaurants that can both procure and afford to use the real stuff will want to use it sparingly; they wouldn't want to waste it by putting a mound of it on each plate. Therefore, they might be more inclined to use the "proper" technique.

Here is a video in which you can see famous chef Naomichi Yasuda using wasabi inside the sushi. It all happens very quickly, but you can clearly see him dip into the bin of wasabi, rub it on the underside of the fish, and then apply the fish to the rice. Here is another video in which Anthony Bourdain explains the "rules" of high-end sushi eating, while dining at the super-famous and super-expensive Sukiyabashi Jiro (the proprietor of which is actually designated as a "living treasure" by the Japanese government). That second video shows the chef brushing on the soy sauce, and the general lack of both soy and wasabi on the plates.

And on to the matter of chopsticks. Some purists claim that you're not supposed to use them, instead using your hands. I've been to Japan, and it really varies by the type of establishment. Here is my take on it: I think it really depends on the formality of the restaurant. In the more formal places, they won't serve any separate wasabi or soy (only gari) and they'll give you a small moist towel (in addition to the oshibori) that you are supposed to use to clean your fingers between pieces of sushi, under the expectation that you will use your hands. If they don't give a towel to clean your fingers, then I think it is acceptable to use chopsticks.

When I say "high-end", I mean a place that specializes in just sushi. In Japan, these places are usually tiny, consisting of only a bar. Each sushi chef services at most 5 customers at a time. Each chef is supported by perhaps one apprentice (who might prepare some of the seafood) and several other apprentices in the kitchen whose primary job is to prepare the rice. Sushi is all about the rice, after all; an apprentice will spend the better part of the first decade of his training just learning how to cook the rice, before even touching any seafood. That's why most casual restaurants in Japan (e.g., izakaya) will forgo even serving sushi, instead offering preparations that require less skill/training (e.g., sashimi).

Despite the awkwardness caused by his recent rants on the topics of modern cooking techniques and, more recently, fandom, Alton Brown is still one of my favorite sources of culinary quotes. One of them, related to nutrition, is:

There are no "bad foods", only bad food habits.

In keeping with my recent posts' unintentional gastronomic theme [1,2], I am going to dedicate this post to debunking a myth related to the above quote. I claim that there is no bad time to eat, only bad eating habits. Specifically, I'd like to dispel the common misconception that the time of one's meal alone can affect weight gain.

I recall hearing a story on NPR a year or two ago about a study which specifically tried to test the claim that eating late at night is unhealthy. The study concluded that there was absolutely no correlation between the proximity of mealtime to sleep and weight gain, other than the fact that people tend to choose to eat more unhealthy foods late at night. I can’t seem to find a reference to that study, however, so I am going to have to do some research myself. Right. Let’s get stuck in.

The majority of our food is actually digested during sleep, so the common argument that “eating late at night is bad because our metabolism [slows or shuts down] during sleep” is incorrect. With that said, there is a correlation between night eating, low self-esteem, reduced daytime hunger, and weight loss among people who are already obese or prone to obesity; however, this correlation does not necessarily imply causation (i.e., the act of eating a late meal does not necessarily provoke these conditions). It may simply be the case that the types of foods that people prefer to eat late at night are less healthy. There is still much debate on the subject; however, many scientists agree that meal frequency, as opposed to time, is one of the best predictors for weight gain. For example, the time between meals is highly correlated to one’s waist size. This makes some intuitive sense, since eating more, smaller meals will help regulate insulin levels, and spikes in insulin levels (which can be caused by large meals and/or large gaps in time between meals) have been linked to weight gain.

A newer study followed the eating and sleeping patterns of 52 subjects over one week. They found a correlation between “late sleepers” (i.e., people who go to sleep late and wake up late) and high body mass index, and that eating after 8pm was associated with higher body mass index. A relatively recent New York Times article summarizing the results of the study makes the further claim that eating late at night leads to weight gain; however, I disagree with that claim on the grounds that correlation does not imply causation. In fact, the original study noted:

Late sleepers consumed more calories at dinner and after 8:00 PM, had higher fast food, full-calorie soda and lower fruit and vegetable consumption.

Therefore, I think the results of the study can be interpreted to mean that there is a correlation between eating/sleeping late and a poor diet.

Furthermore, back in 2006, the same research team conducted a study on monkeys in which they were fed a diet similar to the average (i.e., high-fat) diet common in the USA. The only variable was the time of day that the monkeys were fed. With all else remaining constant, the researchers found no correlation between weight gain and time of feeding.

It was really interesting to see that the monkeys who ate most of their food at night were no more likely to gain weight than monkeys who rarely ate at night. This suggests that calories cause weight gain no matter when you eat them.

While I’m on a roll here, let me quickly dispel yet another myth. I know many people that adhere to the strict “calorie-in/calorie-out” nutritional theory. This seems particularly popular among mathy/engineering types. The idea is that if your body burns fewer calories than what it takes in through food then it will gain weight. This theory, in general, is fallacious. The body doesn’t necessarily consume all of the calories of the food we stick down our throats. The time between meals will, in effect, affect the way one’s body “decides” to digest the food. Furthermore, as this study and others like it suggest, not all types of calorie sources are equal when it comes to how the body processes them!

In conclusion, if you’re overweight, it’s not necessarily legitimate to blame your late-night eating schedule. You can’t even blame your high calorie diet (only the types of calories you choose to eat).
