
Is it cheaper to eat fast food than to cook a meal from scratch? Answering such a question is difficult, given that costs will vary greatly from country to country. Why? Well, raw commodity prices are very volatile due to varying government subsidies, differences in climate, extreme climatic events, supply chains, &c.

The supermarket industry in the US is extremely competitive. SuperValu, for example, is a giant corporation that owns many supermarket chains. It is lucky to eke out a 1.5% profit margin. (In other words, at most 1.5% of its gross income is profit.) That means it could lower its prices by at most ~1.5% without taking a loss. Bulk sellers like Costco and even the giant Wal-Mart are lucky to reach 3%. Successful fast food restaurants like McDonald's, on the other hand, easily reach a profit margin of 20%. That means, in effect, McDonald's could reduce its prices by ~20% and still stay afloat.
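To make the "at most ~1.5%" step explicit, write $R$ for revenue, $C$ for total costs, and $m = (R - C)/R$ for the profit margin. If every price is cut by a fraction $\delta$, and we assume for simplicity that sales volume and costs stay the same, the new profit is

$$(1 - \delta)R - C = (R - C) - \delta R = R\,(m - \delta),$$

which stays non-negative exactly when $\delta \le m$. A margin of $m = 0.015$ therefore allows a price cut of at most 1.5%, while $m = 0.20$ allows one of up to 20%.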

Why is this? One major reason is that companies like McDonald's can and do have complete vertical integration of their supply chains: McDonald's raises its own cattle, grows its own potatoes, transports its own raw ingredients, and largely owns the real estate of its restaurants. In fact, McDonald's even makes a profit off of leasing its real estate to McDonald's franchisees. That flexibility is one reason why a Big Mac will cost the equivalent of US\$10 in Brazil while the same Big Mac will cost US\$1.50 in Croatia. Supermarkets don’t really have much opportunity for vertical integration unless they actually buy and operate their farms and suppliers.

So, is eating fast food really cheaper? As I mentioned in my previous blog entry about food deserts, in the US there is a definitive link between poverty, obesity, and lack of proximity to a supermarket. There was also a study in the UK that discovered a correlation between the density of fast food restaurants and poverty:

Statistically significant positive associations were found between neighborhood deprivation [i.e., poverty] and the mean number of McDonald’s outlets per 1000 people for Scotland ($p < 0.001$), England ($p < 0.001$), and both countries combined ($p < 0.001$). These associations were broadly linear with greater mean numbers of outlets per 1000 people occurring as deprivation levels increased.

Let’s have some fun now and look at the Consumer Price Index (CPI), which estimates average expenditures for various goods over a month. The current CPI in the US for food and beverages is \$227.5 (meaning that the average consumer spends \$227.5 per month on food and beverages). Now let’s assume that non-fast-food is cheaper than fast food and proceed with a proof by contradiction. Under this assumption, the CPI of \$227.5 is obviously a lower bound on the amount one would spend if one only ate fast food (since the CPI includes all types of food-related expenditures). This equates to about \$8 per day. In 2008, the average price of a Big Mac in the US was \$3.57, and it is certainly possible to subsist on two Big Macs per day. That’s especially true since a Big Mac is one of the more expensive items on the McDonald's menu; a McDouble, for example, costs only \$1. This is a contradiction: we have shown that it is in fact possible to live on less than \$8 a day of fast food, which breaks the lower bound and invalidates our assumption that non-fast-food is cheaper than fast food.∎
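Spelled out, taking a 30-day month, the two daily figures compare as follows:

$$\frac{\$227.5}{30\ \text{days}} \approx \$7.58\ \text{per day}, \qquad 2 \times \$3.57 = \$7.14 < \$7.58.$$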

This suggests that it is at least plausible that eating fast food could be cheaper than grocery shopping in the US.

What is a food desert? That’s “desert” as in “Sahara,” not the sweet thing you eat at the end of a meal. According to Wikipedia, it's

any area in the industrialised world where healthy, affordable food is difficult to obtain,

specifically associated with low income. There is a good amount of debate about whether such things even exist. The problem, as I see it, is that this definition is very subjective: One can always have access to high-quality or nutritious food if one is willing to spend enough time traveling to it. If a person lives a couple of miles away from a grocery store but has "access" via public transport that is expensive (for them), does that constitute "access"? Technically, of course, yes. But I think the real question should be:

Is there any statistical correlation between proximity to full-service grocery stores, obesity, and poverty?

I think the answer to that is "yes". Answering why is a much more difficult (and perhaps open) question. Read on to hear my reasoning.

Pronunciation of foreign words in American vs. British English

In which my etymological conjectures are repudiated by Peter Shor.

One of the differences between modern US English (hereafter referred to as "American English") and British English is the way in which we pronounce foreign words, particularly those of French origin and/or related to food. For example, Americans…

- drop the "h" on "herb" and "Beethoven";

- rhyme "fillet" and "valet" with "parlay" as opposed to "skillet"; and

- pronounce foods like paella as /paɪˈeɪə/ (approximating a Castilian or Mexican accent), whereas the British pronounce it as /pʌɪˈɛlə/.

In general, the British seem to pronounce foreign/loan words as they would be phonetically pronounced if they were English, whereas Americans tend to approximate the original pronunciation. I've heard some people claim that this trend is due to the melting-pot nature of America, and others claim that the French pronunciation, in particular, is due to America's very close relations with France during its infancy. This latter hypothesis, however, seems to be contradicted by the following:

Avoid the habit of employing French words in English conversation; it is in extremely bad taste to be always employing such expressions as ci-devant, soi-disant, en masse, couleur de rose, etc. Do not salute your acquaintances with bon jour, nor reply to every proposition, volontiers. In speaking of French cities and towns, it is a mark of refinement in education to pronounce them rigidly according to English rules of speech. Mr. Fox, the best French scholar, and one of the best bred men in England, always sounded the x in Bourdeaux, and the s in Calais, and on all occasions pronounced such names just as they are written.

I wondered: At what point did the USA drop the apparent British convention of pronouncing foreign words as they are spelled?

I asked this question on English.sx, to little fanfare; most people (including Peter Shor!!!1) had trouble accepting that this phenomenon even exists. Therefore, I extend my question to the Blogosphere!

I did some more digging, and it is interesting to note that there seems to be a trend in "upper-class" (U) English to replace words that have an obvious counterpart in French with words that either are of Germanic origin or have no direct equivalent in modern French. For example, in U English:

- scent is preferred over perfume;

- looking glass is preferable to mirror;

- false teeth is preferable to dentures;

- graveyard > cemetery;

- napkin > serviette;

- lavatory > toilet;

- drawing-room > lounge;

- bus > coach;

- stay > corset;

- jam > preserve;

- lunch > dinner (for a midday meal); and

- what? > pardon?

This is admittedly a stretch, but perhaps there is some connection between the US's lack (and some might say derision) of a noble class and its preference for non-U/French pronunciation?

I think the claim that "no two snowflakes are alike" is fairly common. The idea is that there are so many possible configurations of snow crystals that it is improbable that any two flakes sitting next to each other would have the same configuration. I wanted to know: Is there any truth to this claim?

Kenneth Libbrecht, a physics professor at Caltech, thinks so, and makes the following argument:

Now when you look at a complex snow crystal, you can often pick out a hundred separate features if you look closely.

He goes on to explain that there are $10^{158}$ different configurations of those features. That's a 1 followed by 158 zeros, which is about $10^{70}$ times larger than the total number of atoms in the universe. Dr. Libbrecht concludes

Thus the number of ways to make a complex snow crystal is absolutely huge. And thus it's unlikely that any two complex snow crystals, out of all those made over the entire history of the planet, have ever looked completely alike.
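Where does $10^{158}$ come from? Assuming the count is the number of ways to put 100 distinct features in order, that is $100! \approx 9.3 \times 10^{157}$, the order of magnitude is easy to check. The sketch below (factorial_digits is a hypothetical helper, not from Dr. Libbrecht) counts the decimal digits of $n!$ by summing logarithms, since $100!$ itself overflows ordinary integer types.

```shell
#!/bin/sh
# Hypothetical helper: number of decimal digits in n!,
# computed as floor(log10(n!)) + 1, where
# log10(n!) = log10(2) + log10(3) + ... + log10(n).
# Summing logarithms avoids ever forming the huge product itself.
factorial_digits() {
  awk -v n="$1" 'BEGIN {
    s = 0
    for (i = 2; i <= n; i++) s += log(i) / log(10)
    printf "%d\n", int(s) + 1
  }'
}
```

Running factorial_digits 100 prints 158, i.e., $100!$ is a 158-digit number, matching the "1 followed by 158 zeros" scale quoted above.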

Being the skeptic that I am, I decided to rigorously investigate the true probability of two snowflakes having possessed the same configuration over the entire history of the Earth. Read on to find out.
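Going from a count of configurations to the probability of a repeat is a birthday-problem question: among $n$ snowflakes drawn from $N$ equally likely configurations, the chance that at least two match is approximately $1 - e^{-n(n-1)/(2N)}$, which grows with $n^2$ rather than $n$. The sketch below, using a hypothetical helper named collision_probability, evaluates that standard approximation. It assumes every configuration is equally likely (real snow crystals are not), and $n$ and $N$ are whatever inputs you supply, not results from my investigation.

```shell
#!/bin/sh
# Hypothetical helper: birthday-problem approximation of the probability
# that at least two of n items, each drawn uniformly at random from
# N equally likely configurations, coincide:
#     p ~= 1 - exp(-n(n-1) / (2N))
# For tiny exponents, 1 - exp(-x) rounds to 0 in floating point,
# so the first-order approximation p ~= x is used instead.
collision_probability() {
  awk -v n="$1" -v N="$2" 'BEGIN {
    x = n * (n - 1) / (2 * N)
    p = (x < 1e-8) ? x : 1 - exp(-x)
    printf "%.6g\n", p
  }'
}
```

As a sanity check against the classic birthday case, collision_probability 23 365 prints a value of about 0.5.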

Last year I made a rather esoteric joke about a supposed phenomenon called the "Ballmer Peak" that was popularized by a web comic. The idea is that alcohol, at low doses, can actually increase the productivity of a computer programmer. The original claim was obviously in jest since, among other reasons, Randall Munroe (the web comic's author) claimed that this peak occurs at exactly 0.1337% blood alcohol content. This got me thinking: Could there be any truth to this claim? Read on to find out; the results may surprise you.

Sir Robert Burnett

An investigation into the life of the patron saint of alcoholic graduate students.

Political scientist Ed Burmila—sole remaining contributor to one of my favorite weblogs on the Internets, Gin and Tacos—just asked his readership,

What brought you here initially? Was I suggested by one of your friends? Did you arrive from a link on a different site – especially Crooks & Liars? Random internet search? Internet search specifically for gin and/or tacos? Saw a sticker on someone's car? Wrote three words in the search bar, hit ctrl-Enter, and hoped for the best?

I have been reading Gin and Tacos for almost its entire, decade-long existence. I didn't mean for that to sound so hipsterish, but there's no other way to put it. I first stumbled on the site after entering "Robert Burnett" in my search bar, back in the days when G&T.com had more in common with its name than simply being awesome. I, like Ed, was a poor graduate student at the time, and I too had discovered the siren call of Sir Robert Burnett's London Dry Gin. (Perhaps I inherited this penchant from my advisor.) I'm not sure about G&T.com's Robert Burnett fan fiction, though.

I was, however, intrigued by Ed et al.'s historical sleuthing in trying to track down the truth behind the real Robert Burnett. Unfortunately, here is all they were able to conclude:

- Robert Burnett Jr. and Sir Robert Burnett were active in politics, however neither were mayor of London.

- The Burnett family was very active in military recruitment.

- Most importantly, that the Burnett family dealt in liquors.

- Finally, Sir Robert Burnett had a pretty damn nice estate.

Unfortunately, none of my research resulted in specific reference to gin. This is primarily due to the fact that the only available source to me was the Times of London, although there might have been advertisements for Burnett’s Gin in the Times, they did not come through on the search. Someone with more experience in alcohol oriented history could possibly do better.

I was no expert in history; however, like most Ph.D. students, I was a world-renowned expert in procrastination. I therefore took on the task. Read on to see my (now six-year-old) results.

Seven Degrees of Separation

In the year 2651 we will have to create the "Seven Degrees of Separation Game"!

Is it true that everyone on Earth is separated by at most six degrees? There's plenty of empirical evidence to support this claim already, so I am going to take a different, more theoretical approach.

I’m currently teaching a class and receive all of the homework submissions digitally (in the form of PDFs). Printing out the submissions seems like a waste, so I devised a workflow for efficiently grading the assignments digitally by annotating the PDFs. My method relies solely on free, open-source tools.

Here’s the general process:

- Import the PDF into GIMP. GIMP will automatically create one layer of the image for each page of the PDF.

- Add an additional transparent layer on top of each page layer.

- Use the transparent layer to make grading annotations on the underlying page. One can progress through the pages simply by hiding the upper layers.

- Save the image as an XCF (i.e., GIMP format) file.

- Use my xcflayers2pngs script (see below) to export the layers of the XCF file into independent PNG image files.

- Use the composite function of ImageMagick to overlay the annotation layers with the underlying page layers, converting to an output PDF.

Below is the code for my xcflayers2pngs script. It is a Scheme Script-Fu script embedded in a Bash script that exports each layer of the XCF to a PNG file.

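```bash
#!/bin/bash
# xcflayers2pngs: export every layer of an XCF file to its own PNG.
# Usage: xcflayers2pngs INPUT.xcf OUTPUT_PREFIX
# The Script-Fu (Scheme) definitions are piped into GIMP's batch interpreter.
{
cat <<'EOF'
; Return the image's layers as a list, top layer first.
(define (get-all-layers img)
  (let* (
      (layer-info (gimp-image-get-layers img)) ; (count #(layer-ids...))
      (i (car layer-info))
      (all-layers (cadr layer-info))
      (bottom-to-top '())
    )
    ; Walk from the bottom layer (last index) up to the top layer (index 0).
    (while (> i 0)
      (set! bottom-to-top (append bottom-to-top (cons (aref all-layers (- i 1)) '())))
      (set! i (- i 1))
    )
    (reverse bottom-to-top)
  )
)

; Left-pad n with zeros until the result is at least min-length characters.
(define (format-number base-string n min-length)
  (let* (
      (s (string-append base-string (number->string n)))
    )
    (if (< (string-length s) min-length)
      (format-number (string-append base-string "0") n min-length)
      s)))

; e.g. (get-full-name "output" 3) => "output0003.png"
(define (get-full-name outfile i)
  (string-append outfile (format-number "" i 4) ".png")
)

; Save each layer in turn, numbering the files from `layer` upward.
(define (save-layers image layers outfile layer)
  (let* (
      (name (get-full-name outfile layer))
    )
    (file-png-save RUN-NONINTERACTIVE image (car layers) name name 0 9 1 1 1 1 1)
    (if (> (length layers) 1)
      (save-layers image (cdr layers) outfile (+ layer 1)))
  ))

(define (convert-xcf-to-png filename outfile)
  (let* (
      (image (car (gimp-file-load RUN-NONINTERACTIVE filename filename)))
      (layers (get-all-layers image))
    )
    (save-layers image layers outfile 0)
    (gimp-image-delete image)
  )
)

(gimp-message-set-handler 1) ; Send all of the messages to STDOUT
EOF

echo "(convert-xcf-to-png \"$1\" \"$2\")"
echo "(gimp-quit 0)"
} | gimp -i -b -
```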

Running xcflayers2pngs file.xcf output will create output0000.png, output0001.png, output0002.png, ..., one for each layer of file.xcf. Assuming each annotation layer sits directly above its page, each even-numbered PNG file will correspond to an annotation layer, while each odd-numbered PNG file will correspond to a page of the submission. We can then zip the even-numbered files with their associated odd-numbered files using the following ImageMagick trickery:

```bash
convert output???[13579].png null: output???[02468].png -layers composite output.pdf
```
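With -layers composite, ImageMagick zips the two lists by position: the first image before null: (the first odd-numbered file, a page) is combined with the first image after it (the first even-numbered file, its annotation), and so on. Before running the composite on real submissions, it can help to eyeball that pairing. The sketch below uses a hypothetical helper, show_layer_pairs, which is not part of the scripts above; it only lists files and never calls ImageMagick.

```shell
#!/bin/sh
# Hypothetical helper: print the "page annotation" pairs that
#   convert PREFIX???[13579].png null: PREFIX???[02468].png -layers composite ...
# would combine, by lining up the two sorted file lists position by position.
show_layer_pairs() {
  prefix=$1
  pages=$(mktemp)
  notes=$(mktemp)
  ls "$prefix"???[13579].png > "$pages" 2>/dev/null   # odd: pages
  ls "$prefix"???[02468].png > "$notes" 2>/dev/null   # even: annotations
  paste -d ' ' "$pages" "$notes"                       # one pair per line
  rm -f "$pages" "$notes"
}
```

Running show_layer_pairs output in the directory holding the exported PNGs prints lines such as output0001.png output0000.png; an unequal number of pages and annotation layers shows up as an unpaired line.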

We can automate this process by creating a Makefile rule to convert a graded assignment in the form of an XCF into a PDF:

```make
%.pdf : %.xcf
	rm -f $**.png
	./xcflayers2pngs $< $*
	convert $*???[13579].png null: $*???[02468].png -layers composite $@
	rm -f $**.png
```

Gender Representation on the Internet

In which I discover that male names appear much more often than female names on the Internet.

A lot has happened since last August. I successfully defended my Ph.D., for one. I could give a report on our post-defense trip to Spain. I could talk about some interesting work I'm now doing. Instead, I'm going to devote this blog entry to gender inequity.

This all started in November of last year in response to one of Dave's blog posts. Long story short, he was blogging about a girl he had met; in an effort to conceal her identity (lest she discover the blog entry about herself), he replaced her name with its MD5 hash. Curious, I decided to brute-force the hash to retrieve her actual name. This was very simple in Perl:

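```perl
#!/usr/bin/perl -w
# crackname: recover a first name from its MD5 hash by brute force,
# trying every female first name in the US Census list.

use Digest::MD5 qw(md5_hex);

my $s = $ARGV[0] or die("Usage: crackname MD5SUM\n\n");

# Download the census list of female first names if it isn't already here.
system("wget http://www.census.gov/genealogy/names/dist.female.first") unless(-e 'dist.female.first');

open(NAMES, 'dist.female.first') or die("Error opening dist.female.first for reading!\n");

while(<NAMES>) {
    if($_ =~ m/^\s*(\w+)/) {
        my $name = lc($1);
        # Try capitalized and lowercase, each with and without a trailing newline.
        if(md5_hex(ucfirst($name)) eq $s || md5_hex($name) eq $s ||
           md5_hex(ucfirst($name) . "\n") eq $s || md5_hex($name . "\n") eq $s) {
            print ucfirst($name) . "\n";
            exit(0);
        }
    }
}

close(NAMES);

exit(1);
```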

Note that I am using a file called dist.female.first, which is freely available from the US Census Bureau. This file contains the most common female first names in the United States, sorted by popularity, according to the most recent census.

This script was able to crack Dave's MD5 hash in about 10 milliseconds.
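The four comparisons in the script are there because the same name yields completely different hashes depending on capitalization and on whether a trailing newline was included (as it is when a hash is made with something like echo Mary | md5sum). The sketch below, a hypothetical helper named name_hashes that assumes GNU coreutils' md5sum is installed, prints all four variants for a name, which is handy for producing test inputs for crackname.

```shell
#!/bin/sh
# Hypothetical helper (not part of crackname): print the MD5 hashes of the
# four spellings crackname checks, in this order:
#   Capitalized, Capitalized + newline, lowercase, lowercase + newline
# Assumes GNU coreutils md5sum is available.
name_hashes() {
  lower=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  cap=$(printf '%s' "$lower" | awk '{ print toupper(substr($0, 1, 1)) substr($0, 2) }')
  for variant in "$cap" "$lower"; do
    printf '%s' "$variant" | md5sum | cut -d ' ' -f 1     # without newline
    printf '%s\n' "$variant" | md5sum | cut -d ' ' -f 1   # with trailing newline
  done
}
```

For example, the first line printed by name_hashes Mary is the hash of "Mary", which crackname should map straight back to the name.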

This got me thinking: For what else could this census data be used?

My first idea was also inspired by Dave. You see, he was writing a novel at the time. Wouldn't it be great if I could create a tool to automatically generate plausible character names for stories?

```perl
#!/usr/bin/perl -w
# randomname: print a random male and female name at the scarcity
# percentile given as the first argument (random if omitted).

use Cwd 'abs_path';
use File::Basename;

my($scriptfile, $scriptdir) = fileparse(abs_path($0));

my $prob;
$prob = $ARGV[0] or $prob = rand();

# Fetch the census name lists into the script's directory if needed.
system("cd $scriptdir ; wget http://www.census.gov/genealogy/names/dist.all.last") unless(-e $scriptdir . 'dist.all.last');
system("cd $scriptdir ; wget http://www.census.gov/genealogy/names/dist.male.first") unless(-e $scriptdir . 'dist.male.first');
system("cd $scriptdir ; wget http://www.census.gov/genealogy/names/dist.female.first") unless(-e $scriptdir . 'dist.female.first');

# Pick a random name among those whose cumulative frequency (column 3, in
# percent) is the first value at or above the requested percentile.
sub get_rand {
    my($filename, $percent) = @_;
    open(NAMES, $filename) or die("Error opening $filename for reading!\n");
    $percent *= 100.0;
    my $nameval = -1;
    my @names;
    my $lastname;
    while(<NAMES>) {
        if($_ =~ m/^\s*(\w+)\s+([^\s]+)\s+([^\s]+)/) {
            $lastname = ucfirst(lc($1));
            if($3 >= $percent) {
                # Stop once we move past the names tied at the first matching value.
                last if($nameval >= $percent && $3 > $nameval);
                $nameval = $3;
                push(@names, $lastname);
            }
        }
    }
    close(NAMES);
    return $lastname if($#names < 0);
    return $names[int(rand($#names + 1))];
}

sub random_name {
    my($male, $p) = @_;
    my $firstnameprob;
    my $lastnameprob;
    # Split the overall scarcity between the first and the last name.
    do {
        $firstnameprob = rand($p);
        $lastnameprob = $p - $firstnameprob;
    } while($lastnameprob > 1.0);
    # Read the lists from the script's directory, where they were downloaded.
    my $firstfile = $scriptdir . ($male ? 'dist.male.first' : 'dist.female.first');
    return &get_rand($firstfile, $firstnameprob) . " " . &get_rand($scriptdir . 'dist.all.last', $lastnameprob);
}

# Turn on autoflush so STDERR labels and STDOUT names interleave correctly.
sub flushall {
    my $old_fh = select(STDERR);
    $| = 1;
    select(STDOUT);
    $| = 1;
    select($old_fh);
}

print STDERR "Male: ";
&flushall();
print &random_name(1, $prob) . "\t";
&flushall();
print STDERR "\nFemale: ";
&flushall();
print &random_name(0, $prob) . "\n";
```

This script does just that. Given a real number between 0 and 1 representing the scarcity of the name, it randomly generates a name according to the distribution of names in the United States as recorded by the census. Values closer to zero produce more common names, and values closer to one produce rarer names. The parameter can be thought of as the scarcity percentile of the name: a value of $x$ means that the name is less common than the names held by roughly the most common $100x$% of the population. Note, though, that I'm not actually calculating the joint probability distribution between first and last names (for efficiency reasons), so the value you input doesn't necessarily correspond to the probability that a given first/last name combination occurs in the US population.

```
$ ./randomname 0.0000001
Male: James Smith
Female: Mary Smith
$ ./randomname 0.5
Male: Robert Shepard
Female: Shannon Jones
$ ./randomname 0.99999
Male: Kendall Narvaiz
Female: Roxanne Lambetr
```

The "Male" and "Female" portions are actually printed to STDERR. This allows you to use this in scripting without having to parse the output:

```
$ ./randomname 0.75 2>/dev/null
Gerald Castillo	Christine Aaron
```
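The heart of get_rand is a percentile lookup: walk down the census file, which is sorted from most to least common, until the cumulative frequency (the third column, in percent) reaches the requested value. Here is a stripped-down sketch of just that step in awk. name_at_percentile is a hypothetical name, and unlike get_rand it always returns the first matching name rather than choosing randomly among names tied at the same cumulative frequency.

```shell
#!/bin/sh
# Hypothetical helper: print the name at a given scarcity percentile.
# $1: census-style file with columns NAME FREQ% CUMULATIVE% RANK,
#     sorted from most to least common (e.g., dist.all.last)
# $2: percentile as a fraction between 0 and 1
name_at_percentile() {
  awk -v target="$2" '
    BEGIN { target *= 100 }                 # the file stores percentages
    $3 + 0 >= target {                      # first name reaching the target
      print toupper(substr($1, 1, 1)) tolower(substr($1, 2))
      found = 1
      exit
    }
    { rarest = $1 }                         # remember the rarest name seen
    END {                                   # target beyond the file: use it
      if (!found && rarest != "")
        print toupper(substr(rarest, 1, 1)) tolower(substr(rarest, 2))
    }
  ' "$1"
}
```

For example, name_at_percentile dist.all.last 0.5 prints the surname sitting at the 50th cumulative percentile of the census list.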

But I didn't stop there. Here's the punchline of this Shandy-esque recounting:

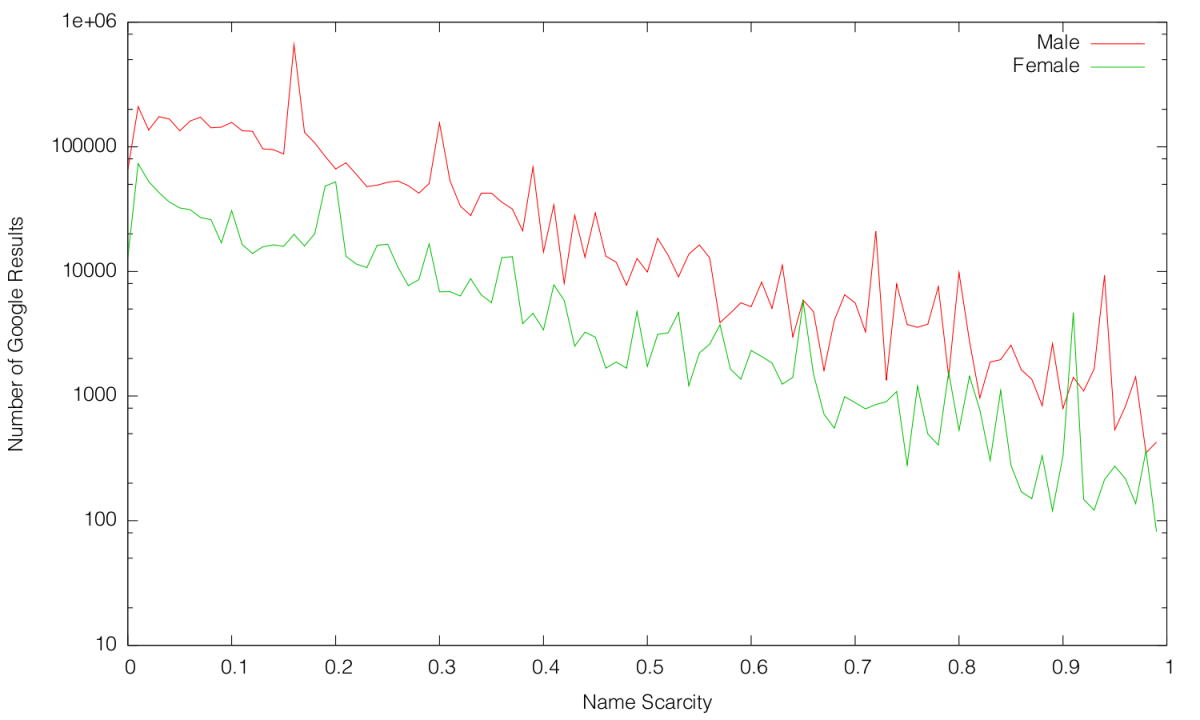

Inspired by Randall Munroe-style Google result frequency charts, I became interested in seeing how the frequency of names in the US correlates with the frequency of names on the Internet. I therefore quickly patched my script to retrieve Google search result counts using the Google Search API. I generated 60 random names (half male, half female) for increasing scarcity values (in increments of 0.01). The results are pretty surprising:

Note that the $y$-axis is on a logarithmic scale.

As expected, the number of Google search results falls off exponentially as the scarcity of the name increases. What is unexpected is the disparity between the representation of male names and female names on the Internet. On average, a male name of a certain scarcity will have over 6.6 times more Google results than a female name of equal scarcity!
